
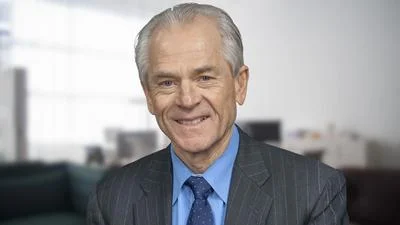

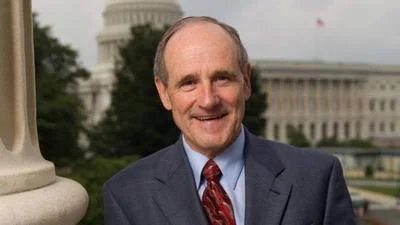

Today, at a Senate Homeland Security and Governmental Affairs Committee hearing to examine social media’s impact on homeland security, experts confirmed the need for Ranking Member Rob Portman’s (R-OH) bipartisan Platform Accountability and Transparency Act (PATA). The legislation will require social media companies to provide vetted, independent researchers and the public with access to certain platform data.

All three witnesses on the panel, Alex Roetter, former Senior Vice President of Engineering at Twitter; Brian Boland, former Vice President, Partnerships Product Marketing, Partner Engineering, Marketing, Strategic Operations, & Analytics at Facebook; and Geoffrey Cain, Senior Fellow for Critical Emerging Technologies, Lincoln Network, agreed that the Platform Accountability and Transparency Act is a critical step toward ensuring more transparency at social media platforms.

In response to Portman’s questioning, Mr. Cain also affirmed that under Chinese law, TikTok has a legal obligation to allow the Chinese Communist Party to access U.S. user data.

A transcript of the questioning can be found below and videos can be found here and here.

Portman: “Thank you, Mr. Chairman, we’ve got a good group of members here, so I’ll keep within the time, and I want to focus on TikTok first. And we’ve talked about TikTok being the most popular social media app in the United States. I also think it poses a risk to our national security, and I want to dig deeper into that today with both of these panels, this one, and then when the TikTok representatives are here later. My understanding is that under Chinese law, the Chinese Communist Party can access data of tech companies that are run out of China or have parent companies that are run out of China. Both ByteDance and TikTok have offices, and they have employees in Beijing. So, Mr. Cain, under Chinese law, does TikTok have a legal obligation to give U.S. user data to the Chinese Communist Party?”

Geoffrey Cain, Senior Fellow for Critical Emerging Technologies at Lincoln Network: “Oh, yes, absolutely. TikTok executives will, under Chinese law, face a minimum of 20 days detention if they refuse to turn over data on anyone in the world. And this could be anybody in China, anybody who’s traveling through China, through Hong Kong. This is a documented legal situation, and it’s not something that TikTok, despite claiming to be an American company, can avoid. I’d also like to point out that TikTok does dodge this question frequently by trying to point out that it is run by a Cayman Islands holding company, a shell company, essentially. This is a red herring to distract from the issue at hand. So the American company, TikTok, and the Chinese company, ByteDance, both report to this Cayman Islands shell company. The company has never said how many people actually work for the shell company, the holding company. But we do know that the CEO of ByteDance and the CEO of TikTok are the same person. So this is listed on the Cayman Islands registry. The CEO is the same person running the ByteDance company in China, according to their website.”

Portman: “Let me dig a little deeper, because we’re going to hear from TikTok later, and based on the testimony we’ve received from them in advance, I think they are going to say they have not provided data to the Chinese Communist Party. Even if CCP requested data, they said they would not share it with them. Again, does China need to make a request to access this data, or does China have the capability to access it at will?”

Mr. Cain: “I’m not aware of the Chinese government having the ability to simply open a computer and access it at will. It would usually happen through somebody in ByteDance or in TikTok. This has already been demonstrated and documented. So there was a BuzzFeed report that came out a few months ago which contained 20 leaked audio files from internal meetings at TikTok in which TikTok employees said that they had to go through Chinese employees to understand how American data was being shared. It also pointed out that employees were saying that there is an individual in Beijing who is called the Master Administrator. We don’t know who that is yet, but this person, according to them, had access to all data in the TikTok system. So when they say that this data is being kept separate, it’s simply a point that’s been disproven already because we have documentation that shows that the data has been shared extensively.”

Portman: “Okay, we’ll have a chance to talk to TikTok about that. But I appreciate your work on this and your testimony today. We have calls for us to legislate more in this area generally. We talked about that earlier, regulations, legislation. My concern is that we really don’t know what’s behind the curtain, the black box, so to speak. We proposed this legislation called the Platform Accountability and Transparency Act to require the largest tech platforms to share data, again with vetted, independent researchers, and other investigators. So we know what’s happening with regard to user privacy, content moderation, product development. We talked about the bias that I believe is out there in social media today with many of the companies and other practices. So, Mr. Boland, you talked a little about this in your testimony. I see in your written testimony you said, ‘To solve the problem of transparency, we must require platforms to move beyond the black box with legislation like the Platform Transparency and Accountability Act.’ Can you explain why that legislation is needed and how it would be used?”

Brian Boland, Former Vice President of Partnerships Product Marketing, Partner Engineering, Marketing, Strategic Operations, & Analytics at Facebook: “Thank you, Senator. Yes, I believe that the Platform Accountability and Transparency Act is one of the most important pieces of legislation that is before you all. It’s not sufficient because we have to address the incentives that we’ve been talking about. But to begin with, we are at a point where we’re supposed to trust what the companies are telling us, and the companies are telling us very little. So I think Facebook, to their credit, is telling us the most, but it’s kind of like a grade of a D out of like an A through F grading system. They’re not telling us much, but they’re telling us more than everybody else, especially YouTube and TikTok. In order to understand the issues that we’re concerned about with hate speech and the way that these algorithms can influence people, we need to have a public understanding and a public accountability of what happens on these platforms.

“There are two parts of transparency that are very important. One is understanding what happens with moderation. So what are the active decisions that companies are taking to remove content or make decisions around content? There’s another critically important part that is around what are the decisions that the algorithms built by these companies are taking to distribute content to people? So if you have companies reporting to you what they’d like to, and I’m sure you’ll hear from them this afternoon, a lot of averages, a lot of numbers that kind of gloss over the concerns. If you look at averages across these large populations, you miss the story. If you think about 220 some odd million Americans who are on Facebook, if one percent of them are receiving an extremely hate-filled feed or a radicalizing feed, that’s over 2 million people who are receiving really problematic content. In the types of data that you’re hearing today, that you’re receiving today, you get an average, which is incredibly unhelpful. So by empowering researchers to help us understand the problem, we can do a couple of things. One, we can help the platforms because today they’re making the decisions on their own, and I believe that these are decisions that should be influenced by the public. Two, then you can bring additional accountability through an organization that has clear oversight over these platforms, whether that be through new rules or new fines that you levy against the companies, you have the ability to understand how to direct them. So today, you don’t know what’s happening on the platforms. You have to trust the companies. I lost my trust with the companies of what they were doing and what Meta was doing. I think we should move beyond trust to helping our researchers and journalists understand the platforms better.”

Portman: “Okay, to the other two, quickly Mr. Cain, Mr. Roetter, do you disagree with anything that was said about the need for more transparency? Just quickly, I’ve got very little time.”

Alex Roetter, Former Senior Vice President of Engineering at Twitter: “I 100 percent agree.”

Mr. Cain: “I 100 percent agree. And I think that this Committee is uniquely poised, given its subpoena powers, to enforce transparency.”

Portman: “Okay, I will have additional questions later. Again, we got so many members here. I want to respect the time, but I appreciate your testimony.”

…

Portman: “To follow up on that particular issue, Twitter Spaces, an audio function newer to the platform, was allegedly rolled out in such a rush, to your point, that it hadn’t been fully tested for safety. Twitter lacked real-time audio content moderation capabilities when they launched it. We’re told that in the wake of our withdrawal from Afghanistan, that was exploited. It was exploited by the Taliban and Taliban supporters who used this platform to discuss how cryptocurrency can be used to fund terrorism. First of all, is that accurate? Mr. Roetter, I’m going to start with you. And second, is that common for Twitter to launch products that lack content moderation capabilities? You said that sometimes they are under pressure to ship products as soon as possible. Was that why this happened?”

Mr. Roetter: “So it is accurate that they’re under pressure to ship products as soon as possible. And Twitter in particular has a history of being very worried about user growth and revenue growth. It’s not the runaway success that Facebook or Google are, and so there was often very extreme pressure to launch things. And a saying we had is that if you walk around and ask enough people if you can do something, eventually you will find someone who says no. And the point of that was really to emphasize you just need to get out and do something. And again, the overwhelming metrics are usage, and you would never get credit or be held up as an example or promoted or get more compensation if you didn’t do something because of potential negative consequences on the safety side or otherwise. In fact, you would be viewed probably as someone that just says no or has a reason not to take action. There’s a huge bias towards taking action and launching things at these companies.”

Portman: “Yeah. So are you aware of this Twitter Spaces issue and the Taliban having exploited it?”

Mr. Roetter: “That specific example, I’m not.”

Portman: “Okay. Do you think, assuming my example is correct, which I believe it is, that the Platform Accountability and Transparency Act, that PATA would have been helpful there to at least get behind the curtain and figure out why these decisions are being made?”

Mr. Roetter: “So I haven’t read the draft of that, but that said, if my understanding is correct, yes. Having more understanding of what these products do and what sort of content is promoted and what the internal algorithms are that drive both decision making and usage of the products, I think that’d be extremely valuable. And without any of that, I would expect examples such as this to keep happening.”

Portman: “On this trust and safety issue, and specifically the product development and business decision-making processes, Mr. Boland maybe I’ll direct this to you, Meta disbanded its responsible innovation team just last week it was announced. And did you see that?”

Mr. Boland: “I did. It was extremely disappointing.”

Portman: “My understanding is they had been tasked with addressing harmful effects of product and development processes. So you’re saying it was concerning to you. Why are you concerned about it? And tell us about how you interacted with integrity teams while you were at Facebook.”

Mr. Boland: “Yes, I know the people who led that team. Very high integrity, very intentional about Responsible Design of Products, as the team was named. Without that kind of center of excellence that’s helping to shape other teams, I fear that Meta is not going to continue to have that as a part of their conversations. You can think about that group as influencing and indoctrinating if you will, the engineers who come to the company, of how to start to think about some of these issues. It’s less hard-coded into the incentive structure, which I think is a missing element, but would have driven really important conversations on how to ethically design products. Disbanding that unit, and I don’t believe them when they say that they are making it a part of everything, that they’re going to interweave it into the company. That’s a very convenient way to dodge the question, in my view. I don’t believe they’re going to continue to invest in it if it’s not a team. And this comes at a time when Meta is building the Metaverse. We don’t know how the Metaverse is going to play out. I am extremely concerned because the paradigms we’ve seen in the past, that we’ve started to understand around content and content distribution are very, very different in the Metaverse. That’s an area that, if I were this Committee, I’d spend a lot of time really trying to understand the risks of the Metaverse. It feels very risky to me. It feels like the next space where there will be under-investment and without a team like Responsible Innovation helping to guide that thinking, that’s concerning.”

Portman: “Again, same question to Mr. Roetter with regard to how to evaluate these trust and safety efforts in general and specifically something like the Responsible Innovation Team and what impact it’s having, do you think that it would be helpful to have this legislation called the Platform Accountability and Transparency Act?”

Mr. Roetter: “I think so. If we get from that more information to illuminate what these algorithms are doing and what the incentive structures are, that would be extremely helpful. I think today we’re operating in a vacuum and what we see a lot of the public conversation about this is people will cherry-pick one example and use it as evidence of whatever their theory is that these companies are doing, that of course it must be true because here’s one example. The fact of the matter is these companies are so massive and there’s so much content, you can cherry-pick examples to prove almost anything you want about these companies. And without broad-scale representative data from which we can compute what is being promoted and then reverse engineer what the incentives must be, we’re never going to see a change into the things they’re optimizing for.”

Portman: “What are your thoughts on that, Mr. Boland?”

Mr. Boland: “Yeah, I think the issue that we face today is that we have to trust and without having a robust set of data to understand what’s happening and make these public conversations, not company conversations, is critical. Meta will like to tell you that they don’t want to put their thumb on the scale when it comes to algorithmic distribution. The challenge is that these algorithms were built in a certain way, that you’re kind of leaning on the scale already. You just don’t realize you’re leaning on it. So these algorithms today are already doing a lot to shape discourse and to shape what people experience. We don’t get to see it, and we have to trust the companies to share with us information that we know that they’re not sharing. As I said earlier, I think the Platform Accountability and Transparency Act is a critical, critical first step, and we need to do it quickly because these things are accelerating, to understand what is actually happening on these platforms.”

Portman: “Mr. Cain, you have the last word.”

Mr. Cain: “I do believe that there are a number of issues that were addressed here today that will have significance not only for our democracy within America, but the position of America in the world. There’s been major changes that I’ve seen personally, having been in China and Russia and recently Ukraine, in the world of technology, in the world of social media. And my greatest concern is that we are ceding too much ground to authoritarian regimes that seek to undermine and to malign us in whatever way they can. The software that we’re using, the AI, the apps, these are ubiquitous. This is not the Cold War where we had hardware, we had missiles pointed at each other. Now we have smartphones. And it’s entirely possible and quite probable that the Chinese Communist Party has launched major incursions into our data within America to try to undermine our liberal democracy.”

Portman: “Well that’s a sobering conclusion, and I don’t disagree with you, and I appreciate your testimony and our other experts. Thank you all.”