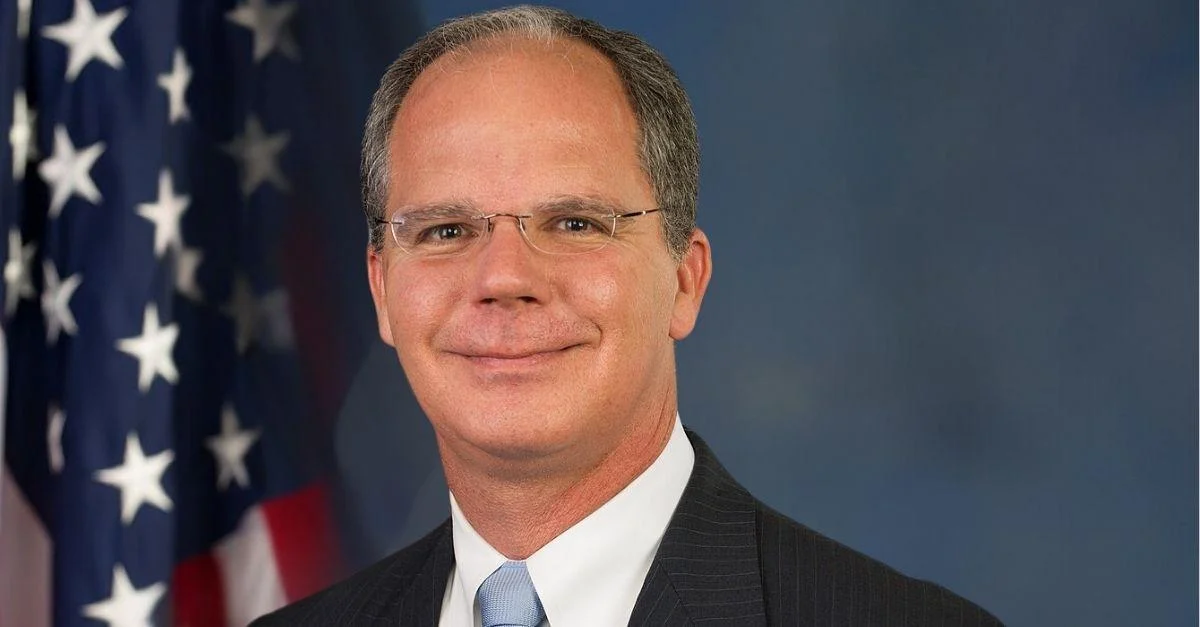

Congressman John Joyce, M.D. (PA-13), Chairman of the Subcommittee on Oversight and Investigations, opened a hearing in Washington, D.C., titled "Examining Biosecurity at the Intersection of AI and Biology."

In his prepared statement, Joyce addressed the opportunities and risks created by the convergence of artificial intelligence (AI) and biotechnology. He noted that while this convergence could lead to major advances in medicine, public health, and scientific research, it also raises significant security concerns.

Joyce highlighted how advances in synthetic biology have made powerful tools more accessible outside traditional laboratory settings. For example, he noted that basic CRISPR gene-editing kits are now sold online for less than $300. He also pointed out that advanced AI systems, such as large language models (LLMs) and biological design tools, are evolving faster than current oversight mechanisms can keep pace.

“In recent years, studies have shown that cutting-edge AI models can walk users step-by-step through complex biological processes, including those relevant to developing or modifying dangerous pathogens. These tools can assist experts in breakthrough research, but they may also enable individuals with far less training to bypass barriers that once protected against accidental or intentional misuse. Some LLMs have even been shown to outperform PhD-level virologists on advanced troubleshooting tasks,” Joyce said.

He added that there is early evidence that AI systems can design entirely new biological entities: "A recent study demonstrated that an AI model generated multiple synthetic viruses—some with capabilities that researchers previously believed were impossible."

As a physician, Joyce acknowledged the positive impact these technologies could have on patient care: "AI is accelerating drug discovery, improving protein modeling, and enabling the development of therapies with unprecedented precision." However, he also warned of the biosecurity risks: "These risks are not theoretical. National security experts warn that adversarial nations—including China, North Korea, Iran, Russia, and others—may seek to exploit AI-enabled biological design tools for malicious purposes. We must take those warnings seriously."

Joyce questioned whether existing government oversight frameworks are sufficient to address these emerging threats. He explained that policies such as those governing Dual Use Research of Concern (DURC) might not apply to an AI-designed organism that does not meet certain criteria, such as being a Select Agent or a known human pathogen.

He acknowledged the steps the administration has already taken but called for continued assessment: "The Trump administration has taken steps to keep up with such advancements, but the federal government must continue to carefully assess whether our current safeguards and reporting systems are adequate in an era of rapidly advancing AI technology."

Joyce concluded by thanking the witnesses for helping Congress address these challenges.